AI software engineer with Metaprogramming

A London-based company building an autonomous AI software engineer is struggling to maintain consistency in the code its agent produces, and is spending hundreds of dollars per prompt trying to fix it.

They are basically automating the copy-pasting of LLM-generated code into your codebase, but this approach has a problem by design. We don’t truly understand what’s happening inside a neural network, and its outputs are inherently variable. Vibe-coding enthusiasts are trying to solve this by stacking agents that check the output of previous agents. But guess what? No matter how much you reduce their "creativity" (for example, by lowering the sampling temperature), these models will never be fully deterministic. And every layer of control is another request to another LLM, which is what makes this approach so expensive.

Our approach

What if the LLM could write all the code you will ever need in one go? With a single output, there is no run-to-run variation to control. That’s my bet!

For this, we need to challenge one of the most sacred principles of software development: YAGNI.

The YAGNI (You Aren't Gonna Need It) principle is a core concept in Extreme Programming (XP) and agile software development. It emphasizes that developers should not add functionality until it is demonstrably needed, rather than trying to anticipate future requirements.

While humans need code to be lean and easy to understand, so it can be explained and maintained, computers don’t care. They can generate all the code you might need in the future, all at once.

We’re no longer talking about XP or Agile.

We’re talking about Generic Programming and Metaprogramming — two powerful approaches that have existed for decades and delivered great results.

The catch?

They produce error messages that are notoriously hard for humans to debug, which has limited their adoption in the industry.

But again, we’re not talking about humans anymore.

Generic programming is a style of computer programming that allows the creation of reusable software components that can be applied to a wide variety of usages without needing to rewrite the underlying logic.
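As a minimal illustration (in Python, chosen here only for the examples; the article doesn’t say which languages the agent targets), the function below is written once against a type variable `T` and then reused for integers, strings, or any other element type without touching its logic. `find_first` is a hypothetical name, not part of any product.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")  # placeholder for "any element type"


def find_first(items: Iterable[T], predicate: Callable[[T], bool]) -> Optional[T]:
    """Return the first item that satisfies predicate, or None if none does.

    The logic never mentions a concrete type, so one definition serves
    lists of ints, strings, records, and so on.
    """
    for item in items:
        if predicate(item):
            return item
    return None


print(find_first([3, 8, 12], lambda n: n > 5))  # 8
print(find_first(["yagni", "dry", "kiss"], lambda s: s.startswith("d")))  # dry
```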

Metaprogramming is a programming technique where a program can treat source code as data, allowing it to generate, analyze, or modify it, and even modify itself while running. In essence, it's code that writes code.
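Here is a small Python sketch of "code that writes code": the generator builds function source code as plain strings (code as data), then `exec` compiles those strings into live functions. The field names and the `get_<field>` naming scheme are assumptions made for the example.

```python
def make_accessor_source(field: str) -> str:
    """Build the source code of a getter for one dictionary key (code as data)."""
    return (
        f"def get_{field}(record):\n"
        f"    return record[{field!r}]\n"
    )


def generate_accessors(fields):
    """Generate, compile, and return one getter function per field name.

    Field names must be valid Python identifiers, since they become part of
    the generated function names.
    """
    namespace = {}
    for field in fields:
        exec(make_accessor_source(field), namespace)  # data becomes executable code
    # exec also adds a __builtins__ entry, so keep only the generated getters
    return {name: fn for name, fn in namespace.items() if name.startswith("get_")}


accessors = generate_accessors(["name", "language"])
print(make_accessor_source("name"))
print(accessors["get_language"]({"name": "Ada", "language": "Python"}))  # Python
```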

A PRACTICAL SOLUTION

The user starts with a prompt describing the software they want to build — including the programming language, architecture, preferences, and a few other details we can’t reveal just yet.

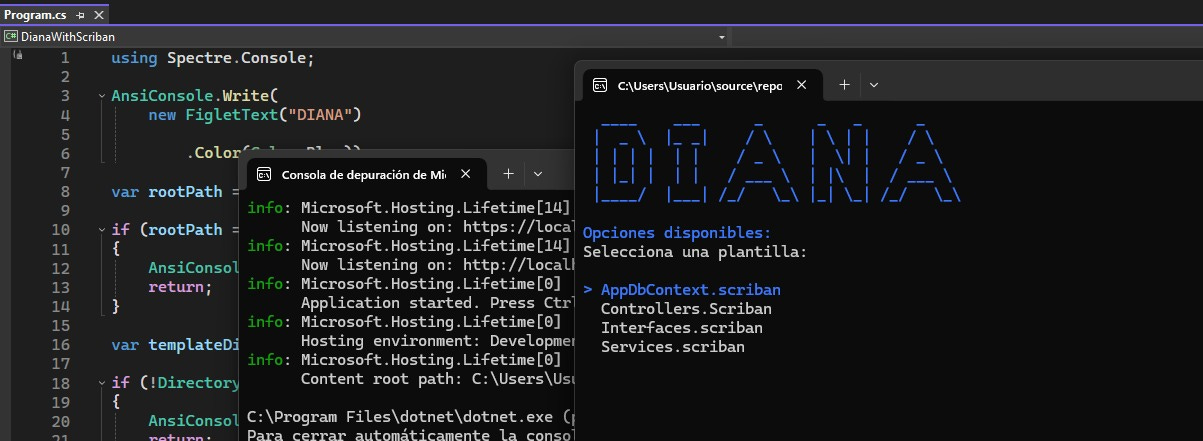

Instead of generating code directly, the LLM creates a code template using Scriban (we chose Scriban over Liquid for its flexibility and performance).

Then, a tool developed by Xipe’s engineers — called Diana — reads the template and programmatically generates both the code and its unit tests.

This approach:

Eliminates cryptic error messages for developers

Avoids stacking agents on top of agents

Drastically reduces the number of LLM calls needed

All while keeping developers in control.

WE CAN’T FORCE PEOPLE TO CHANGE HOW THEY CODE JUST TO MAKE OUR SOLUTION WORK.

We know trying to rewrite the Software Development Life Cycle — or betting against principles like YAGNI — is a losing game.

So instead, we built a Code Dispenser, and grounded it in something developers already understand: Test-Driven Development (TDD).

Test-driven development (TDD) is an approach to software development in which tests are written before the functions they exercise. This way, the tests define what correct code looks like before it exists, and you can check that your team’s code meets that definition.
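A minimal Python illustration of the TDD cycle, using the standard `unittest` module: the test case is written first and describes the expected behavior, and only then is the simplest implementation added to make it pass. `slugify` is a hypothetical example function.

```python
import unittest


# Step 1 (red): the test comes first and describes the behavior we want.
class TestSlugify(unittest.TestCase):
    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_strips_surrounding_whitespace(self):
        self.assertEqual(slugify("  Metaprogramming  "), "metaprogramming")


# Step 2 (green): the simplest implementation that makes the tests pass.
def slugify(text: str) -> str:
    return "-".join(text.strip().lower().split())


if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestSlugify)
    unittest.TextTestRunner(verbosity=2).run(suite)
```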

The Code Dispenser works through console commands, allowing developers to integrate only the parts of code they need — even when the full template scripts have already been generated. This means developers don’t need to radically change the way they think. (At least not right away — that comes with time.)
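The article doesn’t describe the Code Dispenser’s actual commands, so this is a hypothetical sketch of the idea only: every section has already been generated, and a console command emits just the ones a developer asks for. The `dispense` program name, the `--list` flag, and the section names are invented for illustration.

```python
import argparse
from typing import Dict, List, Optional

# Hypothetical output of an earlier generation step: every section the
# templates produced, keyed by name, waiting to be dispensed on demand.
GENERATED_SECTIONS: Dict[str, str] = {
    "model": "class User:\n    def __init__(self, name):\n        self.name = name\n",
    "repository": "class UserRepository:\n    def __init__(self):\n        self.users = {}\n",
    "tests": "def test_user_name():\n    assert User('Ada').name == 'Ada'\n",
}


def dispense(names: List[str], sections: Dict[str, str] = GENERATED_SECTIONS) -> str:
    """Return only the requested sections, in the order they were asked for."""
    missing = [name for name in names if name not in sections]
    if missing:
        raise KeyError(f"unknown section(s): {', '.join(missing)}")
    return "\n".join(sections[name] for name in names)


def main(argv: Optional[List[str]] = None) -> None:
    parser = argparse.ArgumentParser(
        prog="dispense", description="Emit selected pre-generated code sections."
    )
    parser.add_argument("sections", nargs="*", help="names of the sections to emit")
    parser.add_argument("--list", action="store_true", help="list available sections")
    args = parser.parse_args(argv)
    if args.list or not args.sections:
        print("\n".join(sorted(GENERATED_SECTIONS)))
        return
    print(dispense(args.sections))


if __name__ == "__main__":
    main()
```

For example, `python dispense.py model tests` would print only the `User` model and its test, leaving the repository code out of the codebase until someone actually needs it.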

How does TDD help here?

When using a TDD approach, you typically spend time writing tests before implementation. With the Code Dispenser, that same time can be used with your LLM coach to define and refine the code templates. In other words, instead of just writing tests, you're shaping reusable patterns that satisfy those test expectations — automating both structure and quality from the start.

TL;DR

We are creating a new way to use A.I. to generate code consistently, within budget, and in line with existing development practices. It’s a big bet, because in the A.I. world the rules haven’t been written yet, and we are proposing ours!
